Stripping Russian syllabic stress marks in Python

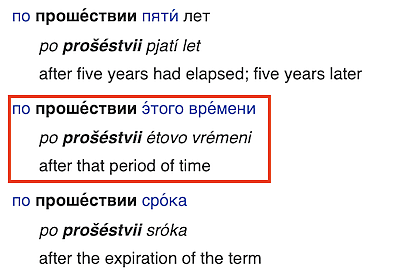

I have written previously about stripping syllabic stress marks from Russian text using a Perl-based regex tool. But I needed a way of doing it solely in Python, so this post just extends that idea.

#!/usr/bin/env python3

def strip_stress_marks(text: str) -> str:
    b = text.encode('utf-8')
    # correct error where latin accented ó is used
    b = b.replace(b'\xc3\xb3', b'\xd0\xbe')
    # correct error where latin accented á is used
    b = b.replace(b'\xc3\xa1', b'\xd0\xb0')
    # correct error where latin accented é is used
    b = b.replace(b'\xc3\xa9', b'\xd0\xb5')
    # correct error where latin accented ý is used
    b = b.replace(b'\xc3\xbd', b'\xd1\x83')
    # remove combining diacritical mark, then decode back to str
    return b.replace(b'\xcc\x81', b'').decode('utf-8')

text = "Том столкну́л Мэри с трампли́на для прыжко́в в во́ду."

print(strip_stress_marks(text))

# prints "Том столкнул Мэри с трамплина для прыжков в воду."

The approach is similar to the Perl-based tool we constructed before, but this time we are working on the bytes object obtained by encoding the text as UTF-8. Since the bytes object has a replace method, we can use that to do all of the work. The first four replacements deal with edge cases where accented Latin characters are used to show the placement of syllabic stress instead of the Cyrillic character plus the combining diacritical mark. In these cases, we just need to substitute the proper Cyrillic character. Then we strip out the “combining acute accent” (U+0301, which is \xcc\x81 in UTF-8). After these replacements, we decode the bytes object back to a str.
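The byte sequences used in the replacements can be checked directly from the code points rather than taken on trust. The following is a minimal sketch; the names `LATIN_TO_CYRILLIC`, `COMBINING_ACUTE` and `utf8_pairs` are made up for illustration and are not part of the original function.

```python
import unicodedata

# Precomposed Latin letter -> the Cyrillic letter it was mistakenly used for.
# (Illustrative table; it mirrors the four substitutions described above.)
LATIN_TO_CYRILLIC = {
    "\u00f3": "\u043e",  # ó -> о
    "\u00e1": "\u0430",  # á -> а
    "\u00e9": "\u0435",  # é -> е
    "\u00fd": "\u0443",  # ý -> у
}
COMBINING_ACUTE = "\u0301"


def utf8_pairs():
    """Return (latin_bytes, cyrillic_bytes) for each substitution, in table order."""
    return [
        (latin.encode("utf-8"), cyrillic.encode("utf-8"))
        for latin, cyrillic in LATIN_TO_CYRILLIC.items()
    ]


if __name__ == "__main__":
    # Print the Unicode name and UTF-8 encoding of each character involved.
    for latin, cyrillic in LATIN_TO_CYRILLIC.items():
        print(
            unicodedata.name(latin), latin.encode("utf-8"),
            "->",
            unicodedata.name(cyrillic), cyrillic.encode("utf-8"),
        )
    print(unicodedata.name(COMBINING_ACUTE), COMBINING_ACUTE.encode("utf-8"))
```

Running it lists each character's Unicode name next to its UTF-8 bytes, which makes it easy to spot a mistyped byte in one of the replace calls.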

Edit:

A little later, it occurred to me that there might be an easier way using the regex (not re) module which does a better job handling Unicode. So here’s a version of the strip_stress_marks function that doesn’t involve taking a trip through a bytes object and back to string:

import regex

def strip_stress_marks(text: str) -> str:
    # correct error where latin accented ó is used
    result = regex.sub('\u00f3', '\u043e', text)
    # correct error where latin accented á is used
    result = regex.sub('\u00e1', '\u0430', result)
    # correct error where latin accented é is used
    result = regex.sub('\u00e9', '\u0435', result)
    # correct error where latin accented ý is used
    result = regex.sub('\u00fd', '\u0443', result)
    # remove combining diacritical mark
    result = regex.sub('\u0301', "", result)
    return result

I thought this might be faster, but the version using the regex module turned out to be about an order of magnitude slower. Oh well.

By compiling the patterns ahead of time, you can reclaim most of the difference, but the regular expression method is still about twice as slow as the bytes object approach. For completeness, here is the version using compiled regular expressions:

import regex

# compile each pattern once, at import time
o_pat = regex.compile(r'\u00f3')
a_pat = regex.compile(r'\u00e1')
e_pat = regex.compile(r'\u00e9')
y_pat = regex.compile(r'\u00fd')
diacritical_pat = regex.compile(r'\u0301')

def strip_stress_marks3(text: str) -> str:
    # correct error where latin accented ó is used
    result = o_pat.sub('\u043e', text)
    # correct error where latin accented á is used
    result = a_pat.sub('\u0430', result)
    # correct error where latin accented é is used
    result = e_pat.sub('\u0435', result)
    # correct error where latin accented ý is used
    result = y_pat.sub('\u0443', result)
    # remove combining diacritical mark
    result = diacritical_pat.sub("", result)
    return result
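The timing comparison mentioned above can be reproduced roughly with the standard library's timeit module. This is only a sketch, not the benchmark actually used for the figures quoted in this post: the three variants are condensed into loops over a shared substitution table, the names `strip_bytes`, `strip_regex`, `strip_compiled` and `benchmark` are made up for illustration, and it falls back to the built-in re module if regex is not installed (an assumption made so the sketch runs anywhere). Absolute numbers will vary by machine and Python version.

```python
import timeit
from functools import partial

try:
    import regex  # third-party module used in this post
except ImportError:
    import re as regex  # assumption: stdlib fallback so the sketch still runs

# (search, replacement) pairs: four Latin look-alikes, then the combining acute.
SUBSTITUTIONS = [
    ("\u00f3", "\u043e"),
    ("\u00e1", "\u0430"),
    ("\u00e9", "\u0435"),
    ("\u00fd", "\u0443"),
    ("\u0301", ""),
]
BYTE_SUBS = [(old.encode("utf-8"), new.encode("utf-8")) for old, new in SUBSTITUTIONS]
COMPILED = [(regex.compile(old), new) for old, new in SUBSTITUTIONS]


def strip_bytes(text: str) -> str:
    """Encode to UTF-8, replace byte sequences, decode back to str."""
    b = text.encode("utf-8")
    for old, new in BYTE_SUBS:
        b = b.replace(old, new)
    return b.decode("utf-8")


def strip_regex(text: str) -> str:
    """Call the module-level sub() with string patterns on every invocation."""
    for old, new in SUBSTITUTIONS:
        text = regex.sub(old, new, text)
    return text


def strip_compiled(text: str) -> str:
    """Use patterns compiled once at import time."""
    for pattern, new in COMPILED:
        text = pattern.sub(new, text)
    return text


SAMPLE = "Том столкну\u0301л Мэри с трампли\u0301на для прыжко\u0301в в во\u0301ду."


def benchmark(number: int = 10_000) -> dict:
    """Return total seconds per variant for `number` calls on SAMPLE."""
    variants = (strip_bytes, strip_regex, strip_compiled)
    return {
        f.__name__: timeit.timeit(partial(f, SAMPLE), number=number)
        for f in variants
    }


if __name__ == "__main__":
    for name, seconds in benchmark().items():
        print(f"{name}: {seconds:.4f} s")
```

Timing a single short sentence mostly measures per-call overhead, so it may be worth repeating the run with a longer sample text before drawing conclusions.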