Linguistics

Factor analysis of failed language cards in Anki

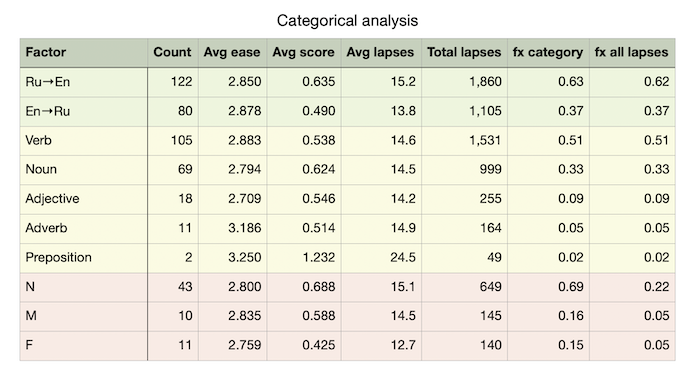

After developing a rudimentary approach to detecting resistant language learning cards in Anki, I began teasing out individual factors. Once I was able to adjust the number of lapses for the age of the card, I could examine the effect of different factors on the difficulty score that I described previously.

Findings

Some of the interesting findings from this analysis:

- Prompt-answer direction - 62% of lapses were in the Russian → English (recognition) direction.

- Part of speech - Over half (51%) of lapses were among verbs. Since the Russian verbal system is rich and complex, it’s not surprising to find that verb cards often fail.

- Noun gender - Between a fifth and a quarter (22%) of all lapses were among neuter nouns; among lapses on noun cards alone, neuter nouns accounted for 69%. This, too, makes intuitive sense because neuter nouns often represent abstract concepts that are difficult to represent mentally. For example, the Russian words for community, representation, and indignation are all neuter nouns.
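The two neuter-noun percentages together imply how large the noun share of all lapses is. A quick arithmetic sketch, assuming that the part-of-speech categories do not overlap and that the percentages above were computed against the same total (neither is stated explicitly):

```python
# Figures quoted in the findings above.
verb_share_of_all = 0.51       # lapses on verb cards / all lapses
neuter_share_of_all = 0.22     # lapses on neuter-noun cards / all lapses
neuter_share_of_nouns = 0.69   # lapses on neuter-noun cards / lapses on noun cards

# Derived: nouns as a fraction of all lapses (assumes consistent totals).
noun_share_of_all = neuter_share_of_all / neuter_share_of_nouns

# Derived: whatever is left for every other part of speech.
other_share_of_all = 1 - verb_share_of_all - noun_share_of_all

print(f"nouns: {noun_share_of_all:.0%}, other: {other_share_of_all:.0%}")
```

Under those assumptions, nouns would account for roughly a third of all lapses, with verbs and nouns together covering more than four fifths.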

Interventions

With a better understanding of the factors that contribute to lapses, it is easier to anticipate failures before they accumulate. For example, I will immediately implement a plan to surround new neuter nouns with a larger variety of audio and sample sentence cards. For new verbs, I’ll do the same, ensuring that I include multiple forms of the verb, varying the examples by tense, number, person, aspect and so on.

Refactoring Anki language cards

Removing stress marks from Russian text

Previously, I wrote about adding syllabic stress marks to Russian text. Here’s a method for doing the opposite - that is, removing such marks (ударение) from Russian text.

Although there may well be a more sophisticated approach, regex is well-suited to this task. The problem is that

```python
def string_replace(stress_map, text):
    # Replace each stressed vowel (vowel + combining acute accent)
    # with its unmarked counterpart.
    for stressed, plain in stress_map.items():
        text = text.replace(stressed, plain)
    return text

# Named stress_map rather than dict to avoid shadowing the builtin.
stress_map = {
    "а́": "а", "е́": "е", "о́": "о", "у́": "у",
    "я́": "я", "ю́": "ю", "ы́": "ы", "и́": "и",
    "ё́": "ё", "А́": "А", "Е́": "Е", "О́": "О",
    "У́": "У", "Я́": "Я", "Ю́": "Ю", "Ы́": "Ы",
    "И́": "И", "Э́": "Э", "э́": "э",
}

print(string_replace(stress_map, "Существи́тельные в шве́дском обычно де́лятся на пять склоне́ний."))
```

This should print: Существительные в шведском обычно делятся на пять склонений.
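Since the stress mark on a Cyrillic vowel is normally encoded as a separate combining acute accent (U+0301) following the vowel, the lookup table can in principle be replaced by removing that one code point. The following is a minimal sketch of that alternative, not the method used in the post; `strip_stress` is a hypothetical helper name.

```python
import unicodedata

COMBINING_ACUTE = "\u0301"

def strip_stress(text: str) -> str:
    """Remove combining acute accents while leaving ё and й intact."""
    # Decompose first so that any precomposed accented letters are split
    # into base letter + combining mark.
    decomposed = unicodedata.normalize("NFD", text)
    # Drop only the acute accent; the diaeresis of ё and the breve of й
    # are different code points and survive.
    without_stress = decomposed.replace(COMBINING_ACUTE, "")
    # Recompose so that ё and й return to their single-code-point forms.
    return unicodedata.normalize("NFC", without_stress)

print(strip_stress("шве\u0301дском"))
```

One caveat with this approach: it also strips acute accents from any non-Russian text in the same string (for example Latin é), which the explicit table does not.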

Escaping "Anki hell" by direct manipulation of the Anki sqlite3 database

There’s a phenomenon that veteran Anki users are familiar with - the so-called “Anki hell” or “ease hell.”

Origins of ease hell

The descent into ease hell has to do with the way Anki handles correct and incorrect answers when it presents cards for review. Ease is a numerical score associated with every card in the database and represents an estimate of the difficulty of the card. By default, when cards graduate from the learning phase, an ease of 250% is applied to the card. If you continue to get the card correct, the ease remains at 250% in perpetuity, even as you see the card at increasing intervals. All good. Sort of.
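To make the mechanics concrete, here is a rough simulation of how ease might drift over a series of reviews. The 250% starting value comes from the text above; the per-answer adjustments and the 130% floor are assumptions based on how Anki's classic SM-2 style scheduler is commonly described, and `simulate_ease` is a hypothetical helper.

```python
DEFAULT_EASE = 250  # percent, applied when a card graduates (from the text)
MIN_EASE = 130      # assumed floor for ease

# Assumed change in ease (percentage points) for each answer button.
EASE_DELTA = {"again": -20, "hard": -15, "good": 0, "easy": 15}

def simulate_ease(answers, start=DEFAULT_EASE):
    """Return the ease after applying a sequence of review answers."""
    ease = start
    for answer in answers:
        ease = max(MIN_EASE, ease + EASE_DELTA[answer])
    return ease

print(simulate_ease(["good"] * 5))               # ease is untouched by "good"
print(simulate_ease(["again", "good", "good"]))  # one lapse is never recovered
```

Under these assumptions, answering "good" never raises the ease, so every lapse leaves a permanent dent unless "easy" is used.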

Typing Russian stress marks on macOS

While Russian text intended for native speakers doesn’t show accented vowel characters to point out the syllabic stress (ударение), texts intended for learners often do have these marks. But how to apply these marks when typing?

Typically, for Latin keyboards on macOS, you can hold down the key (like long-press on iOS) and a popup dialog will show you options for that character. But on the standard Russian phonetic keyboard it doesn’t work. Hold down the e key and you’ll get the option for the letter ё (yes, it’s regarded as a separate letter in Russian - the essential but misbegotten ё.)

A macOS text service for morphological analysis and in situ marking of Russian syllabic stress

Building on my earlier explorations of the UDAR project, I’ve created a macOS Service-like method for in-situ marking of syllabic stress in arbitrary Russian text. The following video shows it in action:

The Keyboard Maestro macro is simple; we execute the following script, bracketed by Copy and Paste:

Beginning to experiment with Stanza for natural language processing

After installing Stanza as a dependency of UDAR, which I recently described, I decided to play around with what it can do.

Installation

The installation is straightforward and is documented on the Stanza getting started page.

First,

```bash
sudo pip3 install stanza
```

Then install a model. For this example, I installed the Russian model:

```python
#!/usr/local/bin/python3

import stanza

stanza.download('ru')
```

Usage

Part-of-speech (POS) and morphological analysis

Here’s a quick example of POS analysis for Russian. I used PrettyTable to clean up the presentation, but it’s not, strictly speaking, necessary.
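A minimal sketch of what such an analysis might look like, not the script from the original post. It assumes the Russian model has already been downloaded and that the `tokenize` and `pos` processors are sufficient for Russian; `feats_to_dict` and `analyze` are hypothetical helper names, and plain printing stands in for PrettyTable.

```python
def feats_to_dict(feats):
    """Parse a feature string such as 'Case=Nom|Number=Sing' into a dict."""
    if not feats:
        return {}  # Stanza reports no features as None
    return dict(pair.split("=", 1) for pair in feats.split("|"))

def analyze(text, lang="ru"):
    """Return (word, universal POS tag, feature dict) for every word in text."""
    import stanza  # imported lazily so the parsing helper works without it

    nlp = stanza.Pipeline(lang, processors="tokenize,pos")
    doc = nlp(text)
    return [
        (word.text, word.upos, feats_to_dict(word.feats))
        for sentence in doc.sentences
        for word in sentence.words
    ]

# Example usage (requires stanza and the downloaded Russian model):
# for text, upos, feats in analyze("Я люблю читать книги."):
#     print(text, upos, feats)
```

Turning the feature string into a dictionary makes it easier to filter on a single property, such as gender or aspect, later on.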

Automated marking of Russian syllabic stress

One of the challenges that Russian learners face is the placement of syllabic stress, an essential determinant of pronunciation. Although most pedagogical texts for students have marks indicating stress, practically no texts intended for native speakers do. The placement of stress is inferred from memory and context.

I was delighted to discover Dr. Robert Reynolds’ work on natural language processing of Russian text to mark stress based on grammatical analysis of the text. What follows is a brief description of the installation and use of this work. The project page on GitHub has installation instructions, but I found a number of items that needed to be addressed that were not covered there. For example, this project (UDAR) depends on Stanza, which in turn requires a language-specific (Russian) model.

Language word frequencies

Since one of the cornerstones of my approach to learning the Russian language has been to track how many words I’ve learned and their frequencies, I was intrigued by reading the following statistics today:

- The 15 most frequent words in the language account for 25% of all the words in typical texts.

- The first 100 words account for 60% of the words appearing in texts.

- 97% of the words one encounters in an ordinary text will be among the first 4000 most frequent words.

In other words, if you learn the first 4000 words of a language, you’ll be able to understand nearly everything.
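Coverage figures of this kind can be checked against any tokenized text of your own. A minimal sketch, assuming the text has already been split into word tokens; `coverage` is a hypothetical helper and the sample tokens are purely illustrative:

```python
from collections import Counter

def coverage(tokens, top_n):
    """Fraction of all tokens accounted for by the top_n most frequent words."""
    counts = Counter(tokens)
    if not counts:
        return 0.0
    covered = sum(count for _, count in counts.most_common(top_n))
    return covered / sum(counts.values())

# Illustrative toy sample, not real frequency data.
sample = ["и"] * 5 + ["в"] * 3 + ["кот"] * 2
print(coverage(sample, 1))
print(coverage(sample, 2))
```

Run against a large corpus with `top_n` set to 15, 100 and 4000, the same function would show how closely a given body of text matches the statistics quoted above.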